A focus on innovation and creativity is ever-present in our work. One of the more prominent examples of that is our annual hackathon, which gives us a chance to fuel up on pizza and flex our coding muscles in a 24-hour programming marathon. Up until this year, these hackathons were limited to a business track competition, the purpose of which was to develop blueprints for new features and products. This last time, however, we introduced a “madness” track: a free-for-all in which anything goes and the only limit was how far we could stretch our imaginations.

As security researchers who enjoy the view from outside the box, we were inspired by a TED Talk called “Can We Create New Senses for Humans?” In it, presenter Dr. David Eagleman discusses how technology can change sensory perception and evolve the way we experience reality. Applying this concept to our craft, we asked ourselves: what if we could listen in on network traffic instead of just looking at it on graphs? This was the seed of an exciting idea that got us looking into how data is converted into sound, a process called sonification.

Sonification 101

Sonification has been around since the early 20th century and the invention of the Geiger counter. It has been applied in many different ways, including medical devices that use sound to monitor health, auditory displays in airplanes and sonar that helps submarines navigate underwater.

Auditory perception, we learned, has a lot of advantages over sight, especially in processing spatial, temporal and volumetric information. Our ability to register the subtlest differences in frequency and amplitude opens up a Pandora’s box of possibilities in data perception.

In his TED Talk, Eagleman describes how “our visual systems are good at detecting blobs and edges. But they are really bad at… screens with lots and lots of data. We have to crawl that with our attentional systems.” As we dove deeper into our research, we found that his ideas are backed up by academic evidence showing that sonification “is an effective method of presenting information for monitoring as a secondary task.” Furthermore, experiments show that “participants performed significantly better in [a] secondary monitoring task using the continuous sonification.”

Note: All of the scripts used for this project are available as open-source code in this GitHub repository under the MIT license.

This was the value proposition we were looking for. Now we just had to figure out how to make the internet sing.

The Sounds of Data

The best and most obvious way to execute our project was to create sounds out of NetFlow logs. To do so, we developed a Python 3 script that collected NetFlow data and processed it into OSC (Open Sound Control) messages. To convert the messages into sound, we used Sonic Pi, a Ruby-based algorithmic synthesizer built to engage computing students through music. Because it is purpose-built for live audio synthesis, Sonic Pi comes with a very reliable timing model, making it the perfect tool for our purposes.
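To make the NetFlow-to-OSC step concrete, here is a minimal sketch of how a Python 3 script could encode and send an OSC message using only the standard library. It is not the project's actual script: the address `/netflow/udp_pps`, the helper names and the supported argument types are illustrative assumptions. Port 4560 is Sonic Pi's default port for incoming OSC messages, and on the Sonic Pi side such a message could be picked up with something like `sync "/osc*/netflow/udp_pps"`.

```python
import socket
import struct


def _osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with int32, float32 and string arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            # bool is a subclass of int in Python; OSC's T/F tags are out of scope here.
            raise TypeError("booleans are not supported in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_pad(arg.encode("utf-8"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_pad(address.encode("ascii")) + _osc_pad(type_tags.encode("ascii")) + payload


def send_osc(address: str, *args, host: str = "127.0.0.1", port: int = 4560) -> None:
    """Send one OSC message over UDP (4560 is Sonic Pi's default incoming OSC port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc_message(address, *args), (host, port))
```

For example, `send_osc("/netflow/udp_pps", 1200)` would emit a single UDP datagram carrying one int32 argument. Hand-encoding keeps the sketch dependency-free; in a real script, a library such as python-osc would be the more practical choice.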

In the DIY spirit of the hackathon, we opted to run Sonic Pi on a Raspberry Pi. The result looked like this:

Turning web traffic into sound

Next, we mapped each traffic type to its own instrument to make the output more melodic.

| Traffic type from NetFlow | Instrument |
|---|---|
| udp_pps | Violas sus |
| udp_bw | 1st Violins |
| icmp_pps | Horn |
| icmp_bw | Harp |
| tcp_pps | Timpani |
| tcp_bw | Xylophone |

Traffic types were assigned to different instruments (pps = packets per second, bw = bandwidth).
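The mapping in the table can be expressed as plain data. The sketch below aggregates the flow records seen during one reporting interval into the six metrics. It rests on several assumptions: `FlowRecord` is a hypothetical, stripped-down stand-in for a decoded NetFlow record, protocols are identified by their IANA numbers (1 = ICMP, 6 = TCP, 17 = UDP), and bandwidth is measured in bits per second. The original scripts may compute these values differently.

```python
from dataclasses import dataclass

# Metric name -> instrument, as listed in the table.
INSTRUMENTS = {
    "udp_pps": "Violas sus",
    "udp_bw": "1st Violins",
    "icmp_pps": "Horn",
    "icmp_bw": "Harp",
    "tcp_pps": "Timpani",
    "tcp_bw": "Xylophone",
}

# IANA protocol numbers for the three protocols that have instruments.
PROTOCOL_NAMES = {1: "icmp", 6: "tcp", 17: "udp"}


@dataclass
class FlowRecord:
    """Hypothetical, stripped-down flow record; real NetFlow records carry many more fields."""
    protocol: int  # IP protocol number
    packets: int   # packet count for the flow
    octets: int    # byte count for the flow


def aggregate(flows, interval_s: float) -> dict:
    """Turn the flows seen during one interval into per-protocol rates."""
    metrics = {name: 0.0 for name in INSTRUMENTS}
    for flow in flows:
        proto = PROTOCOL_NAMES.get(flow.protocol)
        if proto is None:
            continue  # e.g. GRE or ESP: no instrument assigned, so skip it
        metrics[f"{proto}_pps"] += flow.packets / interval_s
        metrics[f"{proto}_bw"] += flow.octets * 8 / interval_s  # bits per second
    return metrics
```

Each resulting metric could then be forwarded as its own OSC message, so that Sonic Pi plays it on the matching instrument.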

We also used shifts in pitch and volume to reflect increases and decreases in traffic levels. For example, a rise in both pitch and volume would alert us to a significant traffic build-up, as in the case of a DDoS attack. Finally, just because we could, we decided to broadcast the whole thing as an internet radio stream. In doing so, we found that the sound of traffic was surprisingly pleasant to the ear.
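One way to turn a traffic rate into pitch and volume is to measure it against a baseline on a logarithmic scale, so that each doubling of traffic raises the note by an octave. The function below is a hypothetical sketch of that idea, not the project's actual mapping: the baseline, the MIDI note range and the rule that volume rises with pitch are all assumptions. Its output is shaped for Sonic Pi's `play`, which takes a MIDI note number and an `amp:` option.

```python
import math


def sonify_level(value: float, baseline: float, base_note: float = 60,
                 semitones_per_doubling: float = 12, min_note: float = 36,
                 max_note: float = 96, max_amp: float = 1.0) -> tuple:
    """Map a traffic rate to a (MIDI note, amplitude) pair relative to a baseline rate."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    # Guard against log2(0) when an interval carries no traffic at all.
    ratio = max(value, 1e-9) / baseline
    # Each doubling of traffic over the baseline raises the note by one octave.
    note = base_note + semitones_per_doubling * math.log2(ratio)
    note = min(max(note, min_note), max_note)
    # Volume rises with pitch: silent at or below min_note, max_amp at max_note.
    amp = max_amp * (note - min_note) / (max_note - min_note)
    return round(note), round(amp, 3)
```

With a baseline of 100 packets per second, baseline traffic plays MIDI note 60 (middle C) at amplitude 0.4, quadrupled traffic plays note 84 at 0.8, and a spike of several hundred times the baseline pins the output at note 96 with full volume.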

Judge for yourself with the video below.

https://www.youtube.com/watch?v=EKVJjfdVolc

Tomato Panic Button

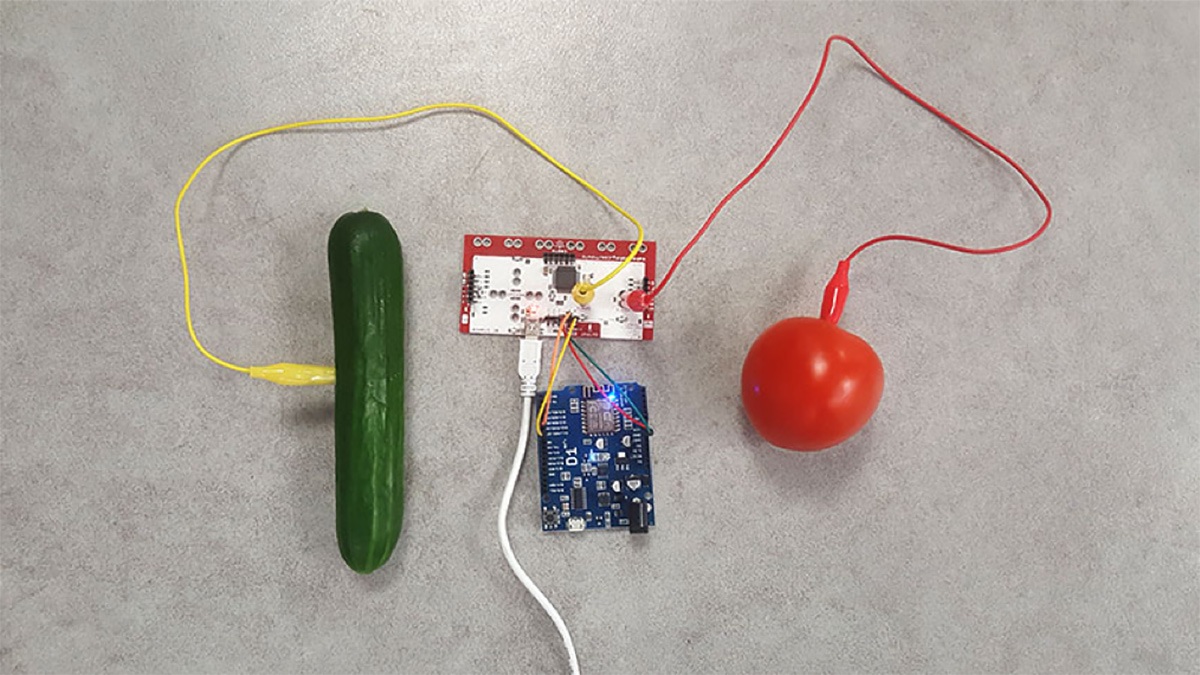

Naturally, it wouldn’t be a proper madness track project without us going a bit overboard. So when it came to creating a response mechanism for a DDoS assault, we immediately focused on the untapped mitigation potential of garden-fresh veggies. The idea was to develop a system that required its operator to squeeze a tomato at the sound of a DDoS attack to activate the mitigation service. Once mitigation was complete, the operator would squeeze a cucumber to signal the all clear. (Care was taken with the tomato, it being the more delicate of the two. No vegetables were harmed in the making of this system.)

For this to work, we connected a tomato and a cucumber to a Wemos D1, a small electronic board with an ESP8266 WiFi microcontroller, and to a Makey Makey invention kit. The result was this ridiculous, but healthy-looking, setup:

Wemos, Makey Makey, and the Tomato Panic Button

I think we can confidently say this was the first time a tomato has been used in DDoS mitigation. No less important, we’re fairly certain that this was the first time a Wemos or similar board (e.g., Arduino) has been used to interact with Sonic Pi, which was sort of the whole point.
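Stripped of the produce, the panic button is a two-state machine: a tomato squeeze moves the system from monitoring to mitigation, and a cucumber squeeze moves it back. The sketch below models only that logic. The path a squeeze takes to reach the software isn't detailed here (a Makey Makey registers a closed circuit as a keypress, and the Wemos D1 adds WiFi), so the `squeeze` entry point, the vegetable names passed as strings and the `start_mitigation`/`stop_mitigation` callbacks are hypothetical.

```python
from enum import Enum
from typing import Callable


class State(Enum):
    MONITORING = "monitoring"
    MITIGATING = "mitigating"


class PanicButton:
    """Hypothetical controller: a tomato squeeze starts mitigation, a cucumber squeeze ends it."""

    def __init__(self, start_mitigation: Callable[[], None],
                 stop_mitigation: Callable[[], None]) -> None:
        self.state = State.MONITORING
        self._start = start_mitigation
        self._stop = stop_mitigation

    def squeeze(self, vegetable: str) -> State:
        """Handle one squeeze event and return the resulting state."""
        if vegetable == "tomato" and self.state is State.MONITORING:
            self._start()
            self.state = State.MITIGATING
        elif vegetable == "cucumber" and self.state is State.MITIGATING:
            self._stop()
            self.state = State.MONITORING
        # Anything else (a second tomato, an early cucumber) is ignored.
        return self.state
```

Ignoring out-of-order squeezes means a nervous double squeeze of the tomato won't trigger mitigation twice.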

Not Just Fun and Games

As we were working on this project, we couldn’t help but think that ‘sonifying’ attack alerts using high and low frequencies could play a real role in the future of security monitoring. While vegetable-based DDoS mitigation probably won’t catch on, the idea of receiving lower-priority information through sound has a lot of merit and will likely attract further research. Sound already plays an important part in other alerting mechanisms, so it’s curious that it isn’t more common in security monitoring. As the SIEM industry looks for new ways to tackle the growing problem of information overload, expanding the sensory array could be an idea worth exploring.
