As Incapsula’s prior annual reports have shown, bots are the Internet’s silent majority. Behind the scenes, billions of these software agents shape our web experience by influencing the way we learn, trade, work, let loose, and interact with each other online.

However, bots are also often designed for mischief. In fact, many of them are used for some kind of malicious activity, including mass-scale hack attacks, DDoS floods, spam schemes, and click fraud campaigns.

For the third year running, Incapsula is publishing its annual Bot Traffic Report, a statistical study of the usually invisible flow of bot traffic on the web. This year we build on our previous findings to report year-over-year bot traffic trends. We also dig deeper into Incapsula’s database, drawing on an even larger data sample to provide new insights into bot activity.

Methodology

The data presented herein is based on a sample of over 15 billion human and bot visits occurring over a 90-day period, from August 2, 2014 to October 30, 2014. It was collected from 20,000 Incapsula-protected websites, each with at least 10 human visitors per day.

Geographically, the observed traffic includes all of the world’s 249 countries, territories, and areas of geographical interest (as defined by the ISO 3166-1 standard).

The analysis was powered by the Incapsula Client Classification engine, a proprietary technology that cross-verifies factors such as HTTP fingerprint, IP address origin, and behavioral patterns to identify and classify incoming web traffic. The Client Classification engine is deployed on all Incapsula-protected websites as part of the company’s multi-tier security solution.
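The Client Classification engine itself is proprietary and is not described beyond the factors listed above. As a rough illustration of what cross-verifying several signals can mean, here is a minimal Python sketch in which a visit that claims to be a browser is checked against three hypothetical signals: a header fingerprint, an IP-origin flag, and a request rate. The `Visit` fields, the expected header list, the 60-requests-per-minute threshold, and the output labels are all assumptions for illustration, not Incapsula's actual rules.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Headers that mainstream browsers normally send (illustrative, not exhaustive).
EXPECTED_BROWSER_HEADERS = ("Accept", "Accept-Language", "Accept-Encoding")
# Hypothetical behavioral threshold; a real system would tune this per site.
MAX_HUMAN_REQUESTS_PER_MINUTE = 60.0


@dataclass
class Visit:
    user_agent: str
    headers: dict[str, str] = field(default_factory=dict)
    ip_is_datacenter: bool = False  # hypothetical result of an IP-origin lookup
    requests_per_minute: float = 0.0


def classify(visit: Visit) -> str:
    """Cross-check a visit's claimed identity against independent signals."""
    if "Mozilla" not in visit.user_agent:
        # The client does not even claim to be a browser.
        return "declared bot"

    suspicious = 0
    # HTTP fingerprint: a "browser" missing standard browser headers is inconsistent.
    if any(h not in visit.headers for h in EXPECTED_BROWSER_HEADERS):
        suspicious += 1
    # IP origin: browsers rarely originate from hosting/datacenter ranges.
    if visit.ip_is_datacenter:
        suspicious += 1
    # Behavior: sustained request rates beyond human browsing speed.
    if visit.requests_per_minute > MAX_HUMAN_REQUESTS_PER_MINUTE:
        suspicious += 1

    if suspicious == 0:
        return "likely human"
    if suspicious == 1:
        return "needs challenge"
    return "suspected bot"
```

The design choice the sketch tries to capture is that no single signal decides the outcome: a forged User-Agent string alone does not make a visit look human if its headers, origin, and pace disagree with it.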

Report Highlights

Signs of the Slow Demise of RSS in Good Bot Traffic

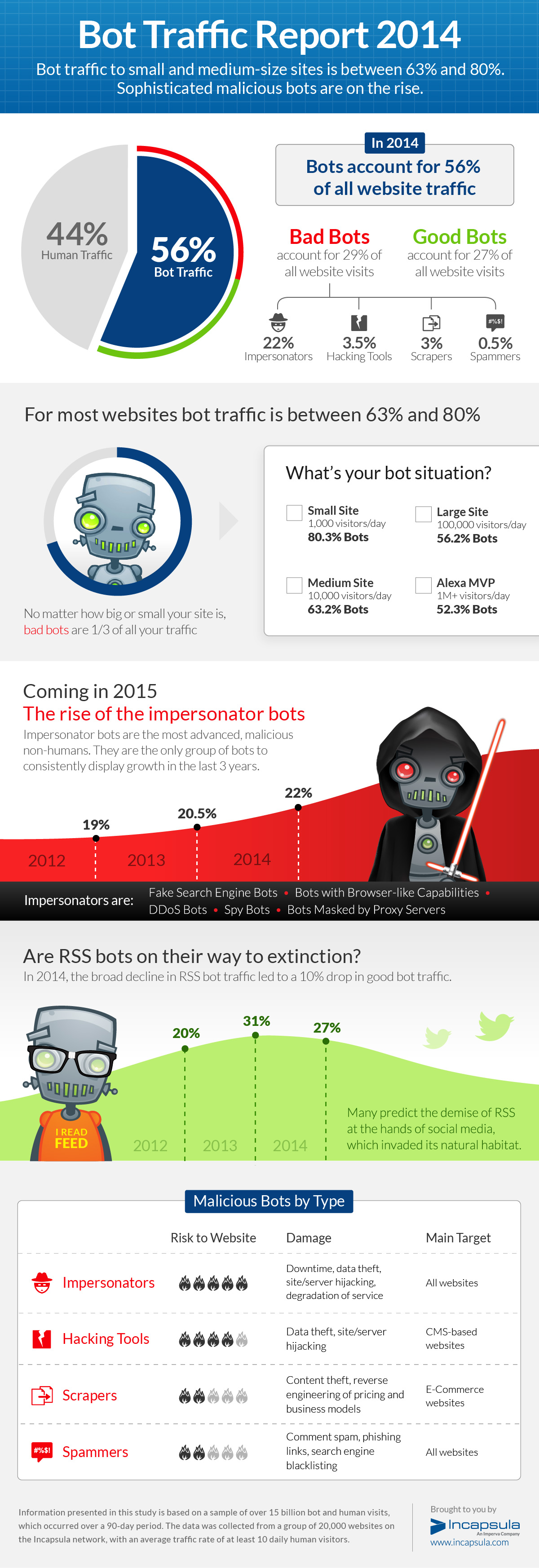

In our last report, covering the period from August 2, 2013 to October 30, 2013, bots accounted for over 60 percent of all traffic flowing through Incapsula-protected websites. In the period covered by this report, bot traffic decreased to 56 percent of all web visits, reversing the upward trend we had observed over the past two years.

In trying to understand this decline, we noticed that most of it reflects a drop in so-called good bot activity, specifically from bots associated with RSS services. Our analysts’ initial assumption was that the shift was related to the shutdown of the Google Reader service.

Upon further inspection, we saw that the Feedfetcher bot, associated with the Google Reader service, was still as active as ever, while the decline in RSS bot activity was across the board. This broad downward trend in RSS bot activity is the main reason for the approximately 10 percent drop in good bot activity, and is another indication of the slow demise of RSS services.

Bot Traffic to Smaller Websites Climbs Above 80%

On July 24, 2014, Incapsula published an in-depth analysis of Googlebot activity. It showed that Googlebots do not play favorites: they crawl both small and large websites at a predetermined frequency, regardless of a website’s actual popularity.

This left us wondering whether all other non-human agents are equally ‘hype-immune.’

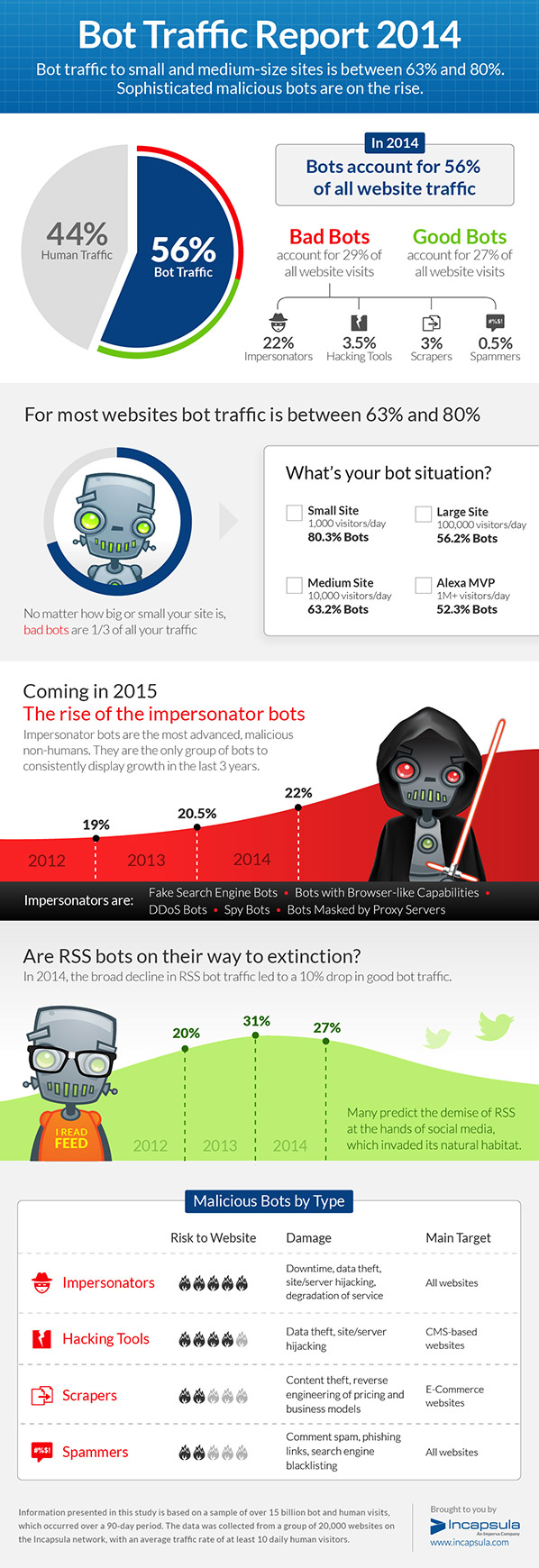

To put this idea to the test, we split our sample into four subcategories based on the number of daily human visitors. We found that smaller websites tend to receive a higher percentage of bot visits, with bots accounting for approximately 60 to 80 percent of all their traffic.
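This kind of size split can be reproduced on any set of per-site visit counts. The sketch below groups sites into four buckets by daily human visits and computes each bucket's pooled bot share. The bucket boundaries are an assumption (the report states only that there were four subcategories), and the sample figures in the usage line are made up for illustration, not report data.

```python
from collections import defaultdict

# Assumed bucket boundaries on daily human visits; the report does not list them.
# Each entry is (inclusive lower bound, exclusive upper bound, label).
SIZE_BUCKETS = [
    (10, 1_000, "tiny"),
    (1_000, 10_000, "small"),
    (10_000, 100_000, "medium"),
    (100_000, float("inf"), "large"),
]


def bucket_for(daily_human_visits):
    """Return the bucket label, or None for sites below the 10-visitor floor."""
    for low, high, label in SIZE_BUCKETS:
        if low <= daily_human_visits < high:
            return label
    return None


def bot_share_by_bucket(sites):
    """sites: iterable of (daily_human_visits, daily_bot_visits) pairs.

    Returns {label: bot visits / all visits}, pooled over every site in the bucket.
    """
    humans = defaultdict(int)
    bots = defaultdict(int)
    for human_visits, bot_visits in sites:
        label = bucket_for(human_visits)
        if label is None:
            continue  # excluded, mirroring the minimum-traffic criterion
        humans[label] += human_visits
        bots[label] += bot_visits
    return {label: bots[label] / (bots[label] + humans[label]) for label in humans}


# Illustrative numbers only.
print(bot_share_by_bucket([(100, 400), (500, 500), (50_000, 50_000), (5, 100)]))
# → {'tiny': 0.6, 'medium': 0.5}
```

Pooling visits weights each bucket toward its busiest sites; averaging per-site shares instead would weight every site equally, and the two approaches can give different numbers.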

This raises interesting questions about the realities faced by the average website owner.

It’s difficult to say what the absolute average number of daily website visitors is, but during the period of this report, most websites received fewer than 10,000 visits a day. So, while bot traffic to larger websites stands at roughly 50 percent, small and medium websites, which represent the bulk of the Internet, are actually serving two to four bot sessions for every human visitor.
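Converting a bot share into bot sessions per human visitor is simple arithmetic: if a fraction p of all visits are bots, there are p / (1 - p) bot visits for every human one. A minimal Python helper (the function name is ours, chosen for illustration):

```python
def bots_per_human(bot_share: float) -> float:
    """Convert a bot share of total visits (0 <= share < 1) into bot visits per human visit."""
    if not 0.0 <= bot_share < 1.0:
        raise ValueError("bot_share must be in [0, 1)")
    return bot_share / (1.0 - bot_share)


for share in (0.5, 0.6, 2 / 3, 0.8):
    print(f"{share:.0%} bots -> {bots_per_human(share):.1f} bot visits per human visit")
```

At the roughly 50 percent share seen on larger sites the ratio is one to one; it reaches two to one at about 67 percent and four to one at 80 percent.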

This data should also interest all web hosts who cater to sites in this category. For one, they should ask how bots are affecting their bandwidth consumption and, consequently, their bottom line. It is safe to assume that there is money to be saved by promoting better bot filtering practices, not to mention the added benefit of hardened client site security.
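A host wanting a first estimate can start from its own traffic logs. The back-of-the-envelope sketch below splits a period's bandwidth between bots and humans. It assumes a single figure, `bot_bytes_ratio`, for how much data an average bot visit transfers relative to a human visit; that parameter, the function names, and every number in the example are hypothetical inputs to be replaced with measured values.

```python
def bot_bandwidth(total_gb, bot_share, bot_bytes_ratio=1.0):
    """Estimate the gigabytes attributable to bots.

    total_gb: total bandwidth served in the period.
    bot_share: fraction of visits made by bots (0..1).
    bot_bytes_ratio: assumed bytes per bot visit divided by bytes per human visit
        (bots often skip images and scripts, so this may be well below 1).
    """
    bot_weight = bot_share * bot_bytes_ratio
    human_weight = 1.0 - bot_share
    total_weight = bot_weight + human_weight
    if total_weight == 0:
        return 0.0  # only bots, and they transfer nothing
    return total_gb * bot_weight / total_weight


def bot_bandwidth_cost(total_gb, bot_share, price_per_gb, bot_bytes_ratio=1.0):
    """Estimated spend on bot traffic, in the same currency as price_per_gb."""
    return bot_bandwidth(total_gb, bot_share, bot_bytes_ratio) * price_per_gb


# Hypothetical small site: 1,000 GB/month, 80% bot visits, bots fetch a quarter of the bytes.
print(round(bot_bandwidth(1_000, 0.8, 0.25)))  # 500
```

Under these assumptions, even bots that transfer only a quarter of the bytes a human visit does still account for half the bandwidth of a site where they make 80 percent of visits.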

Bad Bots Threaten Big and Small Sites Alike

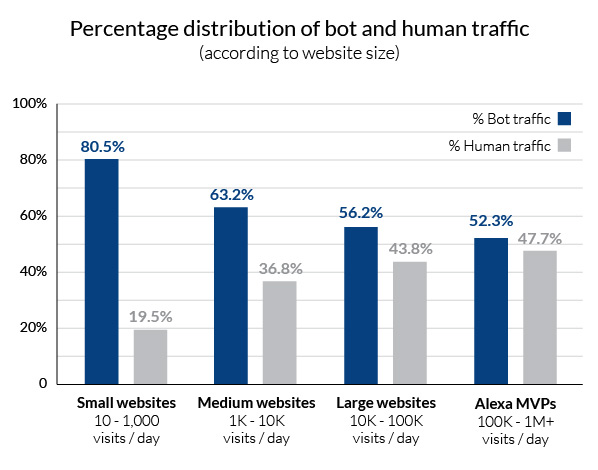

Drilling deeper, we wanted to determine whether any website group was more exposed to bot-enabled cyber-attacks during the period of this report. As it turns out, malicious bots pose a comparable threat to all websites, regardless of size.

As the next graph shows, the average percentage of bad bots consistently hovers around the 30 percent mark, regardless of website size or popularity. In absolute terms, Incapsula believes that malicious bot traffic generally grows in proportion to a site’s human traffic.

While larger sites are assaulted by many more malicious bots, the overall risk factor is the same for every website owner: roughly one in three visitors is a malicious agent.

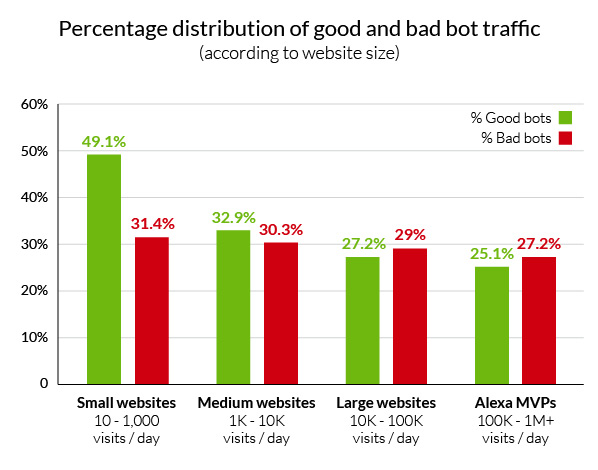

Impersonator Bots Are Taking the Field

In our previous report, we discussed the dangers of so-called impersonator bots: the more advanced, malevolent intruders engineered to circumvent common security measures. This category includes DDoS bots with browser-like characteristics, rogue bots masked by proxy servers, and still others that masquerade as legitimate search engine crawlers. The common denominator remains the same: these are the work of more capable hackers, experienced enough to use their own tools, be they modified versions of existing malware kits or new scripts coded from scratch.
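For bots that pose as search engine crawlers, one widely used countermeasure is forward-confirmed reverse DNS. Google documents that a genuine Googlebot IP reverse-resolves to a hostname under googlebot.com or google.com, and that this hostname resolves back to the same IP. The Python sketch below applies that check with the standard `socket` module. The suffix list is an assumption based on Google's guidance and should be checked against its current documentation; the resolver parameters exist only so the function can be tested without network access.

```python
import socket

# Hostname suffixes accepted for Googlebot (assumed; confirm against Google's docs).
GOOGLEBOT_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Forward-confirmed reverse DNS check for a visitor claiming to be Googlebot.

    Note: gethostbyname_ex returns IPv4 addresses only, so this sketch covers IPv4.
    """
    try:
        hostname, _aliases, _addrs = reverse_lookup(ip)
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return False
    hostname = hostname.rstrip(".").lower()
    # Step 1: the PTR record must point into Google's crawler domains.
    if not hostname.endswith(GOOGLEBOT_SUFFIXES):
        return False
    try:
        _name, _aliases, addresses = forward_lookup(hostname)
    except OSError:
        return False
    # Step 2: the hostname must resolve back to the same IP; a forged PTR record fails here.
    return ip in addresses
```

A request whose User-Agent says Googlebot but fails this check is a likely impersonator. Since DNS lookups are slow, a deployment would typically cache the result per IP rather than repeat it on every request.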

This is the only bot category to show consistent growth for the third year running. While total bot activity has dropped by 10 percent since last year’s report period, impersonator traffic continued to grow, increasing to 22 percent, nearly a 10 percent increase over the period covered by last year’s report.

These numbers confirm what many security experts already know: hacker tools are increasingly being designed for stealth.

We have plenty of examples. For instance, Google recently revealed that new technology is able to solve CAPTCHAs with roughly 90 percent accuracy. Incapsula’s own report from March 26, 2014 showed that 30 percent of DDoS bots are able to retain cookies, thereby bypassing common cookie-based security challenges.

A DDoS impersonator, masked as Internet Explorer, passes JS/cookie challenges but is still blocked by Incapsula.
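The cookie figure matters because a basic cookie challenge only proves that a client stores and returns cookies. The minimal sketch below is a generic signed-cookie scheme, not Incapsula's mechanism: it issues an HMAC-signed token bound to the client IP, and any client with a cookie jar, including the cookie-retaining DDoS bots mentioned above, passes it on its second request. The key handling, IP binding, and function names are assumptions for illustration.

```python
import hashlib
import hmac
import secrets

# Hypothetical per-deployment secret; a real service would load and rotate this.
SECRET_KEY = secrets.token_bytes(32)


def _sign(client_ip: str, nonce: str, key: bytes) -> str:
    return hmac.new(key, f"{client_ip}|{nonce}".encode(), hashlib.sha256).hexdigest()


def issue_challenge_cookie(client_ip: str, key: bytes = SECRET_KEY) -> str:
    """Value the server sets in a cookie on the first, unverified request."""
    nonce = secrets.token_hex(8)
    return f"{nonce}.{_sign(client_ip, nonce, key)}"


def passes_cookie_challenge(client_ip: str, cookie, key: bytes = SECRET_KEY) -> bool:
    """True if the client sent back a cookie signed for its IP."""
    if not cookie or "." not in cookie:
        return False
    nonce, mac = cookie.split(".", 1)
    # Compare as bytes so arbitrary (even non-ASCII) cookie input cannot raise.
    return hmac.compare_digest(mac.encode(), _sign(client_ip, nonce, key).encode())


# A bot that keeps a cookie jar: fails once, stores the cookie, then passes.
jar = {}
ip = "198.51.100.7"
if not passes_cookie_challenge(ip, jar.get("challenge")):
    jar["challenge"] = issue_challenge_cookie(ip)
print(passes_cookie_challenge(ip, jar["challenge"]))  # True
```

Because passing only shows cookie support, a challenge like this works better as one signal among several than as a final verdict on whether a visitor is human.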

As malicious bots evolve, Incapsula is driven to think beyond common signature-based and challenge-based security measures. This is why, in 2014, our bot filtering began to rely increasingly on reputation and behavioral analysis. Today, our bot identification process not only asks, ‘Who are you?’ but also attempts to evaluate, ‘Why are you here?’

Our analysts know that bots can falsify their identities, but not their intentions. By examining the context of website visits, Incapsula’s goal is to stay ahead of the evolving bot threat and to thwart the large number of malicious impersonators.
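Incapsula does not publish its behavioral rules, but the general idea of judging intent from context can be illustrated. The hypothetical Python sketch below inspects one session's requests and flags patterns that suggest a purpose other than browsing: a request rate beyond human speed, pages fetched without any of their assets, and a session dominated by login or admin endpoints. The thresholds, file suffixes, path prefixes, and names are all assumptions chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass

# Illustrative lists and thresholds; a real deployment would tune these per site.
ASSET_SUFFIXES = (".css", ".js", ".png", ".jpg", ".gif", ".svg", ".woff2")
SENSITIVE_PREFIXES = ("/login", "/wp-login.php", "/admin")
MAX_REQUESTS_PER_SECOND = 5.0
MAX_SENSITIVE_FRACTION = 0.5


@dataclass
class Request:
    path: str
    timestamp: float  # seconds since the session started


def behavior_flags(session: list[Request]) -> list[str]:
    """Return reasons a session looks non-human. Assumes requests are in time order."""
    if not session:
        return []
    flags = []
    duration = session[-1].timestamp - session[0].timestamp
    # Treat very short sessions as lasting one second to avoid dividing by ~0.
    rate = len(session) / max(duration, 1.0)
    if rate > MAX_REQUESTS_PER_SECOND:
        flags.append("high request rate")
    if not any(r.path.endswith(ASSET_SUFFIXES) for r in session):
        flags.append("no page assets fetched")
    sensitive = sum(r.path.startswith(SENSITIVE_PREFIXES) for r in session)
    if sensitive / len(session) > MAX_SENSITIVE_FRACTION:
        flags.append("mostly sensitive endpoints")
    return flags
```

A bot can present a flawless browser disguise and still trip checks like these, which is roughly what it means for intentions to be harder to falsify than identities.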

Follow our blog as we continue to keep an eye on bot activity, discuss bot filtering techniques, and provide periodic updates on bot attacks and bot behavior. For those interested in learning how to identify bots on their own website, see our companion blog post on that topic.

