From the news headlines, we know that data breaches are on the rise – both in frequency and scale. While this reality is unsettling, it’s not surprising, because the volume of data that organizations collect and store continues to grow exponentially each year. Every day, the global population creates 2.5 quintillion bytes of data, and some estimates state that by the end of 2022, 97 zettabytes (one zettabyte is one trillion gigabytes) of data will be created, captured, copied, and consumed worldwide.

This data is valuable and therefore attractive to cybercriminals, who steal or manipulate it to commit fraud, sell it on, or hold it for ransom. Organizations are well aware of these threats and invest heavily in cybersecurity measures, yet data breaches continue to occur. To understand why, we need to look at how the data landscape has changed over the last decade and how that change has made traditional cybersecurity frameworks and playbooks obsolete.

The data landscape pre-2010

Before 2010 and the mass internet adoption spurred on by the rise of the smartphone, organizations had a relatively well-defined and controlled footprint of people, processes, and technology for capturing, processing, and storing data.

At that time, data was still largely collected on paper. It was typically handed to a small number of employees (e.g., data entry operators) whom the organization had thoroughly vetted. They entered the data into monolithic, green-screen applications, which stored it in one of the handful of enterprise databases available at the time. All of this ran largely within the organization’s own data centers, under the control of its IT and security teams.

The data landscape post-2010

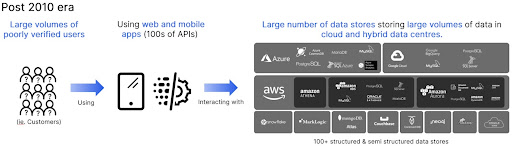

Mass adoption of the internet and the digitization of the economy since 2010 have seen a significant shift in our data practices and processes.

Today, hundreds or even thousands of people (e.g., customers) enter data via web and mobile apps. These users are poorly verified, often needing nothing more than an email address to gain access. The centralized monolithic and green-screen applications are gone, replaced by web and mobile apps that typically rely on hundreds of APIs. The data is stored in any of more than 100 types of structured and semi-structured data stores, and these applications and data workloads run in a variety of environments (e.g., on-premises, cloud, hybrid).

All this creates a highly complex modern data landscape that is challenging to secure, even for the most mature cyber and data protection professionals. At the same time, it creates more opportunities for cybercriminals to access this data.

Organizations are investing in various controls to address some of these issues, but significant gaps remain.

For example, Data Loss Prevention (DLP) focuses on internal rather than external users. Identity and access management (IAM) and encryption can’t protect against insider threats or stolen credentials, because in both cases the person accessing the data holds valid access. Network and endpoint controls aren’t effective at the data layer.

This is why organizations need to move away from traditional cybersecurity frameworks made obsolete by the modern data landscape to adopt a data-first cybersecurity approach.

Here are three key areas of focus when developing a data-centric security strategy.


Protect the gateways to data stores

Organizations first need to take greater care in securing the gateways to their data stores, namely their applications and APIs. This includes using a proven Web Application and API Protection (WAAP) solution that uses machine learning to minimize false positives and remains highly effective at countering zero-day attacks. The WAAP should have a strong API security focus that extends the monitoring, detection, and prevention of API abuse to the data layer. It should also protect against account takeover attacks, as well as client-side attacks that hijack form data. The gold standard adds Runtime Application Self-Protection (RASP), which secures the app or API from within its runtime environment to defend against zero-day and software supply chain attacks.


Protect the data itself

Once these apps and APIs are better secured, organizations need to secure the data layer itself with data-centric controls. These controls should be applied across the different data types (structured, semi-structured, and unstructured) sitting in different environments (on-premises, cloud, and hybrid).

Key data-centric security controls

- Data Activity Monitoring – Granular monitoring of access to data stores.

- Data Access Risk Analytics – Use AI/ML and behavior analytics to identify anomalous and risky data access behavior.

- Data Discovery & Classification – Identify which assets store PII and other sensitive data, and therefore where the largest risks lie.

- Data Access Control – Continuously review data store access privileges and work toward a least-privilege model.

- Data Encryption – Encrypt data in transit to prevent eavesdropping, and at rest to protect it if storage media or backups are accessed without authorization.

- Data Tokenization – Substitute sensitive data with non-sensitive tokens for use in non-production and QA environments.

- Data Masking – Mask views of data to meet data security and privacy requirements. Tokenization and masking usually work together.

- Data Loss Prevention (DLP) – Extend DLP to external users, web apps, APIs, and the data stores behind them.
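
To make the discovery, masking, and tokenization controls more concrete, here is a minimal Python sketch. It assumes that two hypothetical regex patterns (`PII_PATTERNS`) stand in for a real classification engine and that a hypothetical in-memory `TokenVault` stands in for a hardened tokenization service. It illustrates the ideas only; it is not how any particular product implements them.

```python
import re
import secrets

# Hypothetical detection patterns for illustration only; real classifiers use
# far richer rules (checksums, surrounding context, dictionaries).
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card_number": re.compile(r"\b\d{4}[- ]?\d{4}[- ]?\d{4}[- ]?\d{4}\b"),
}


def classify(value: str) -> list[str]:
    """Return the names of the PII patterns that match a field value."""
    return [name for name, pattern in PII_PATTERNS.items() if pattern.search(value)]


def mask(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*' for display."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


class TokenVault:
    """Hypothetical in-memory vault that swaps sensitive values for random tokens.

    A production vault would be a separate, access-controlled service; this
    dictionary-backed class only illustrates the substitution idea.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so a repeated value always maps to the same
        # token, which keeps joins working in tokenized QA datasets.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)  # random, so not derivable from the value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; only authorized callers should reach this."""
        return self._token_to_value[token]


record = {"name": "Alice", "email": "alice@example.com", "card": "4111 1111 1111 1111"}
vault = TokenVault()
for field, value in record.items():
    labels = classify(value)
    if labels:
        print(f"{field}: classified as {labels}")
        print(f"  masked view: {mask(value)}")
        print(f"  QA copy:     {vault.tokenize(value)}")
```

Because the vault returns the same token for a repeated value, tokenized copies still join correctly across tables, while the masked view keeps just enough of the value (here, the last four characters) for someone to confirm it without seeing the rest.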

Imperva Data Security Fabric is a unified data security platform that brings together new and existing data security controls to fill the gaps created by the changing data landscape. By providing full visibility into data access across all data stores, it makes it easier for data security, compliance, governance, and privacy teams to manage the lifecycle of sensitive and business-critical data, no matter where it resides. The key benefit of this unified, data-centric approach is that it enables these teams to understand and mitigate risky data access and practices before they turn into a data breach.


Enable the SOC with data-centric telemetry

The final part of the data security puzzle is enabling the security operations center (SOC) to detect and respond to incidents across the modern data landscape more effectively. This requires the SOC to receive a feed of data-centric telemetry in a form that SOC analysts can readily understand. AI and ML can help detect anomalous or risky data access and provide contextualized alerts that eliminate guesswork and speed up response. When responding to a data breach or suspected data breach, knowing the who, what, when, where, why, and how of data access is critical. With data-centric telemetry, your SOC analysts will have these answers at their fingertips.
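
As an illustration of what a contextualized, data-centric alert might look like, the Python sketch below flags a data access event whose volume is far above a user’s historical baseline (a simple z-score test) and packages the who, what, when, where, and how into an alert. The `DataAccessEvent` fields, the three-standard-deviation threshold, and the sample values are all hypothetical; real data access risk analytics use much richer behavioral models, and the "why" still calls for an analyst’s judgment.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean, stdev


@dataclass
class DataAccessEvent:
    """One data access record, as a hypothetical activity monitor might emit it."""
    user: str            # who
    table: str           # what
    rows_read: int
    timestamp: datetime  # when
    source_ip: str       # where
    client_app: str      # how


def is_anomalous(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Flag `current` if it lies more than `threshold` sample standard
    deviations above the user's historical mean (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough baseline to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current > mu  # flat baseline: any increase stands out
    return (current - mu) / sigma > threshold


def build_alert(event: DataAccessEvent, history: list[int]) -> dict:
    """Package the context a SOC analyst needs to triage the event."""
    return {
        "who": event.user,
        "what": f"{event.rows_read} rows from {event.table}",
        "when": event.timestamp.isoformat(),
        "where": event.source_ip,
        "how": event.client_app,
        "baseline_mean_rows": round(mean(history), 1),
    }


history = [120, 95, 130, 110, 105]  # rows read per query on previous days
event = DataAccessEvent("svc_reporting", "customers", 250_000,
                        datetime(2022, 6, 1, 2, 13), "203.0.113.7", "ad-hoc SQL client")
if is_anomalous(history, event.rows_read):
    print(build_alert(event, history))
```

A per-user baseline is used rather than one global limit because "normal" volume differs widely between, say, a reporting service and a support agent; the alert then shows the analyst both the event and the baseline it deviated from.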

In the current climate, it is almost inevitable that an organization will be the victim of a data breach. It is not a case of “if” but “when.” That is why organizations must review their cybersecurity strategy now and adopt a data-centric approach. This will not only greatly reduce the likelihood of a large-scale data breach but also better prepare them to respond quickly when they are breached. As past examples have shown, the response is critical to the future health and prosperity of the organization.
